Humanity is fighting back against natural disasters, using artificial intelligence to predict, monitor and alleviate catastrophes.

Tech giant Google is using artificial intelligence to predict the paths of floods and the locations of earthquake aftershocks.

In Britain, a volcanologist at the University of Bristol has been adapting AI to monitor and predict volcanic eruptions, the scientific journal Nature reports this month. The technique includes learning to spot ground distortions forming around volcanoes and to interpret what they mean.

In California, two students are seeking to predict bushfires. Sensors in the ground collect data about the moisture in the biomass. They measure the presence of dead leaves and branches and the absence of moisture. The hope is also that the sensors will reduce the need for fire prevention crews to physically inspect vast areas of forest.

The ability to make sense of natural-disaster data already collected, and detect deviations in new data, shapes as a powerful new tool. The hope is that AI in conjunction with geomorphic, weather and satellite imagery data will not only warn people about danger but also will model how a disaster will pan out.

That could be of great assistance in Australia, where bushfires and floods claim lives.

Google’s AI project on flooding is about identifying the course of water in a flooding event and warning of its path. The biggest challenge is achieving this quickly.

“We arrived at trying to forecast floods just because of their immense, terrible impact affecting hundreds of millions of people every year,” project leader Sella Nevo tells The Australian.

“On the other hand, on a more optimistic note, also the great ability to prevent a good portion of those fatalities. Early warning systems have been shown across the board to be very effective in reducing fatalities.”

He says Google is tackling floods in stages. In northeast India, it already has piloted a scheme where it collects satellite imagery and, from it, generates high-resolution elevation maps.

The project requires higher-resolution data than what’s available from Google Maps. Researchers instead convert two-dimensional satellite image maps into detailed 3-D ones.

“We need to remove various structures that can confuse the model; for example, if you have trees, the satellite will think the ground is above the trees … you need to be able to take them out,” Nevo says.

“If you have bridges, the satellite sees that the river is blocked. So we use what’s called convolutional neural networks to remove all of these artefacts and create what’s called the digital terrain model, which is an elevation map without these things.”

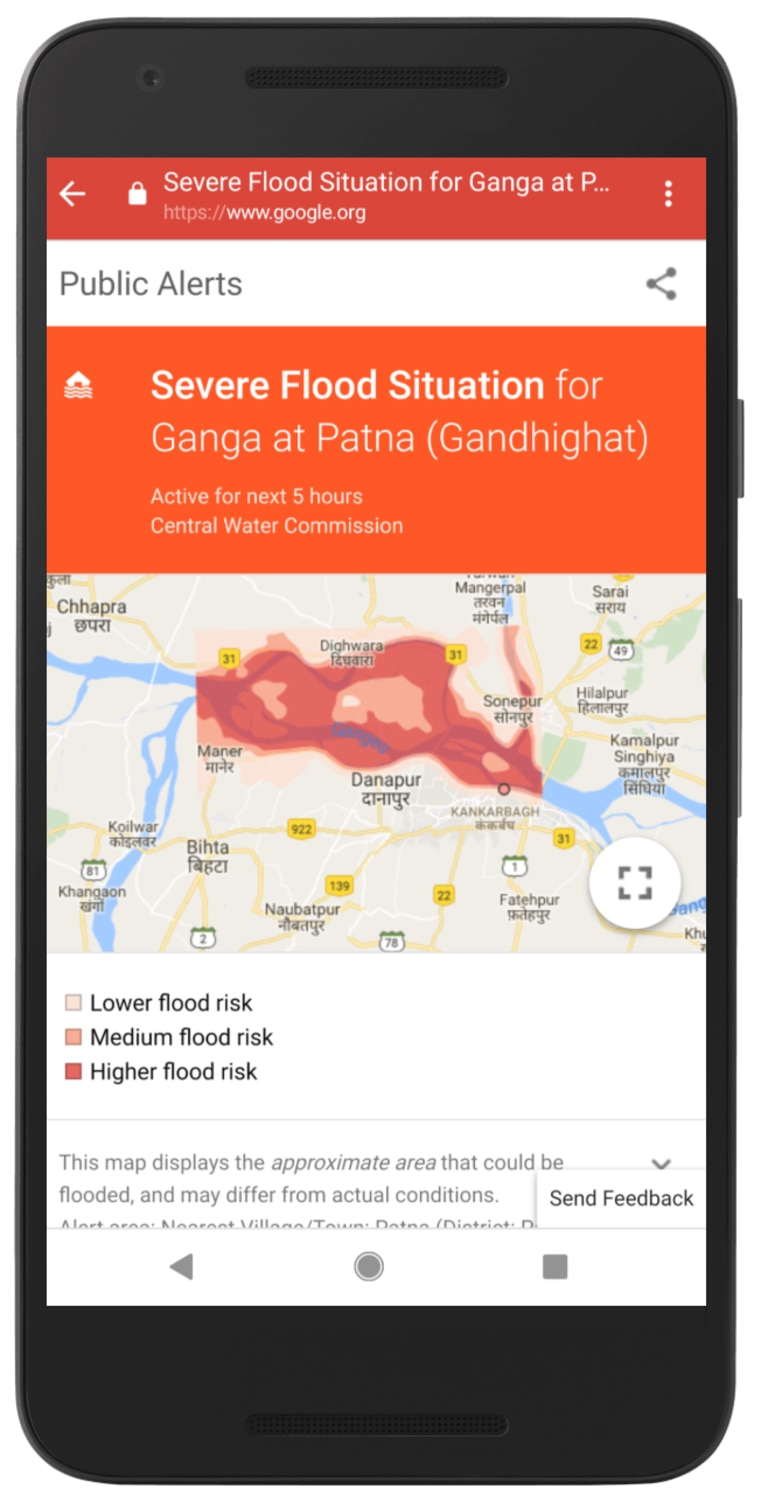

He says the Central Water Commission in India has been providing the water levels inside the country’s rivers as well as forecasts. “Based on these two inputs, we can then try to model how the water will behave across the floodplain and then show what areas are going to be affected.”
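To make the idea of combining an elevation map with a river level concrete, here is a minimal sketch. It is not Google's model, which the article does not describe in detail. It assumes a tiny made-up elevation grid and a simple rule: water spreads from river cells to any neighbouring cell whose ground lies below the water level. The function name `flooded_cells` and all the numbers are hypothetical.

```python
from collections import deque


def flooded_cells(terrain, river_cells, water_level):
    """Return the set of (row, col) cells that are connected to the river
    and whose ground elevation is below water_level.

    terrain is a grid of ground elevations in metres (a toy stand-in for
    a digital terrain model); river_cells are the cells the river occupies.
    """
    rows, cols = len(terrain), len(terrain[0])
    flooded = set()
    queue = deque()

    # Start from river cells that are themselves below the water level.
    for r, c in river_cells:
        if terrain[r][c] < water_level:
            flooded.add((r, c))
            queue.append((r, c))

    # Breadth-first spread to the four neighbouring cells.
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and (nr, nc) not in flooded and terrain[nr][nc] < water_level:
                flooded.add((nr, nc))
                queue.append((nr, nc))
    return flooded


# Hypothetical terrain: a river runs down the middle column; the low
# ground on the right is shielded by a ridge of higher cells.
terrain = [
    [5.0, 4.0, 1.0, 4.0, 5.0],
    [5.0, 2.5, 1.0, 6.0, 2.0],
    [5.0, 4.0, 1.0, 6.0, 2.0],
]
river = [(0, 2), (1, 2), (2, 2)]
result = flooded_cells(terrain, river, water_level=3.0)
print(sorted(result))
```

In this toy case the low ground at the right edge stays dry because higher ground separates it from the river, which is why stray "high ground" such as treetops or bridges in the elevation data would distort the result. A real hydraulic simulation also accounts for flow volume and timing, which this sketch ignores.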

An alert system warns people on their smartphones of any high risk of flooding in their area. The alerts are sent to users as notifications and made available to local government and non-government organisations. They may also be delivered in person, for example by motorbike, to people without phones or when flooding knocks out communication systems.

The alerts system already has been piloted in India, where modelling predicted a high risk of flooding with more than 90 per cent accuracy.

The next phase is to offer people more detailed information about the course of floods and to integrate it into Google Maps so they can see where the predicted floods are in relation to them. “That’s one of the several things we’re working on,” Nevo says.

However, cost is an issue. Thousands of computer central-processing units are needed to run simulations whose inputs can dramatically change with prevailing conditions.

Nevo says Google’s modelling is based on local government upstream measurements, and modelling offers three to 24 hours of warning time for residents, but only for large rivers.

Google is improving its modelling with a hydrological model that estimates how much water will be discharged based on precipitation forecasts. Nevo hopes this new system can provide flooding information days ahead of an event.

Even this technology aimed at predicting riverine floods is no help in dealing with the lightning-fast flash flood experienced in Toowoomba and the Lockyer Valley in Queensland in 2011. Nevo says flash floods are on Google’s agenda. “We definitely intend to look more into flash floods, but they’re much more challenging in terms of the data that you need.”

While the initial focus is on developing countries, he says there would be “incredible value” in rolling out the simulations to Australia in future.

While large earthquakes are not so prevalent in Australia — in the past 50 years only a handful of serious tremors have occurred at Newcastle, NSW; Meckering and Cadoux, Western Australia; and Tennant Creek in the Northern Territory — a project conducted jointly by Harvard University and Google wants to predict the aftershocks once an earthquake strikes.

Brendan Meade, professor of earth and planetary science at Harvard University, and a Google project lead, says the frequency of aftershocks decreases with time and follows a well-established empirical law, but the unknown quantity is where aftershocks occur.
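The article does not name the empirical law. A likely candidate, offered here as an assumption, is the Omori-Utsu relation from seismology, in which the aftershock rate falls off roughly as K / (c + t)^p with time t since the main shock. The sketch below uses made-up parameter values purely to show the shape of the decay; the function name is hypothetical.

```python
def aftershock_rate(t_days, k=100.0, c=0.1, p=1.0):
    """Omori-Utsu form: expected aftershocks per day, t_days after the main shock.

    k, c and p are illustrative values only, not fitted to any real
    earthquake sequence.
    """
    return k / (c + t_days) ** p


# The rate drops steeply at first and then more slowly.
for day in (1, 10, 100):
    print(day, round(aftershock_rate(day), 2))
```

A relation of this kind describes only how often aftershocks occur over time. It says nothing about where they strike, which is the gap the Harvard and Google project is trying to fill.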

His approach is to take information about past large earthquakes and aftershocks, and use machine learning to predict future aftershocks for new earthquakes.

Meade says existing earthquake catalogues offer a full three-dimensional description of what part of the earth ruptured with past quakes. The research involves combining this information with a catalogue of aftershocks.

But there’s a problem. High-quality earthquake and aftershock data has been available only since the late 1990s. Accumulating it takes time because the mean recurrence time of large earthquakes is longer than a human lifetime.

“Even in California, the mean recurrence time for large earthquakes in the San Andreas Fault exceeds 100 years,” he says. “So fundamentally we’re working in a data-limited field. This is in contrast to many things in machine learning where you have huge amounts of data.”

Researchers therefore are taking a different approach, calculating how stressed the earth is after an earthquake event. An AI system modelled on the human brain and called a neural network finds the best combination of stresses to predict where the aftershocks will occur. The researchers use seismic measurements, GPS data and radar technologies.

Meade says mapping of the earth’s crust is “astonishingly good” in some places, but its quality is inconsistent.

“We’re working very hard and as diligently as we can towards having better insights about where and when earthquakes are going to happen, but the reality is, it’s still early days,” he says.

Published in The Australian newspaper.